
Archives For Simonluca Landi

You have just finished deploying the latest version to your production environment, but something went wrong, and after a few minutes or hours the business guys ask you to revert to the previous version. You were smart and had backed up the version that was in production in a tar.gz. Next you manually copied the backup and deployed it to all your servers… without making mistakes, because you had perfect documentation for this procedure and you remembered every step of the rollback… didn’t you?

Antipattern: use a backup to rollback an application to a previous version.

The recipe: automate the rollback in the same way you automate the deploy

In the last post we saw how to use business metrics to validate a deploy, using a mix of software instrumentation and PaaS services. But what should you do when a deploy validation fails?

You have at least 2 different aspects to consider:

- Changes at “behaviour” level (your source code)

- Changes at “data” level (your database)

Rollback of Source Code

At onebip.com we use a simple trick: every deploy goes into a new folder with a unique generated name (provided by our deployment pipeline tool). After a successful and validated deploy we update a symbolic link “onebip” so that it points to the working directory of the latest version of the application. To roll back the code, we just point the symbolic link back to the previous version, which takes a few seconds.

But how do we know which version is the previous one? We create a previousVersion file with a very simple command:

<echo msg="Preparing version info file in ${deploy.basedir}" />

<phingcall target="exec-pssh">

<property name="ssh.host.remote" value="${ws.hosts.argument}" />

<property name="ssh.command"

value="echo `readlink ${deploy.basedir}` > ${deploy.basedir.number}/previousVersion" />

</phingcall>

<echo msg="Version info OK!" />

Now the rollback becomes easy:

<target name="rollback">

<echo msg="Rollback" />

<exec command="echo '-H ${ssh.username}@${ws.host}'" outputProperty="ws.hosts.argument" />

<phingcall target="exec-pssh">

<property name="ssh.host.remote" value="${ws.hosts.argument}" />

<property name="ssh.command"

value="

cd ${deploy.basedir} &amp;&amp;
PREVIOUS_VERSION=`cat previousVersion` &amp;&amp;
echo PREVIOUS_VERSION is $PREVIOUS_VERSION &amp;&amp;
if [ ! -d $PREVIOUS_VERSION ];
then
echo FAILURE PREVIOUS_VERSION $PREVIOUS_VERSION does not exist, we can not rollback;
exit 255;
fi &amp;&amp;
cd .. &amp;&amp;
rm -fR ${deploy.basedir} &amp;&amp;
ln -s $PREVIOUS_VERSION ${deploy.basedir} &amp;&amp;
ACTUAL_VERSION=`readlink ${deploy.basedir}` &amp;&amp;
if [ $ACTUAL_VERSION != $PREVIOUS_VERSION ];
then
echo FAILURE ACTUAL_VERSION: $ACTUAL_VERSION, PREVIOUS_VERSION: $PREVIOUS_VERSION;
else
echo OK link ok actual: $ACTUAL_VERSION;
fi

" />

</phingcall>

<echo msg="Rollback done" />

</target>
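The logic of the rollback target can also be tried on a single machine, without phing or pssh. The following is only a sketch under assumptions: the function name `rollback_link` is made up for illustration, and it repoints the link in place with `ln -sfn` instead of removing and recreating it as the target above does.

```bash
#!/bin/bash
# rollback_link BASEDIR
# BASEDIR is a symbolic link to the current release folder. That folder holds
# a previousVersion file with the path of the release it replaced.
rollback_link() {
    local basedir=$1
    local previous
    previous=$(cat "$basedir/previousVersion") || return 1
    if [ ! -d "$previous" ]; then
        echo "FAILURE previous version $previous does not exist, cannot rollback"
        return 1
    fi
    # -n stops ln from following the existing link into the release folder
    ln -sfn "$previous" "$basedir"
    if [ "$(readlink "$basedir")" != "$previous" ]; then
        echo "FAILURE link points to $(readlink "$basedir"), expected $previous"
        return 1
    fi
    echo "OK link ok actual: $previous"
}
```

Calling `rollback_link /path/to/link` prints a line starting with OK or FAILURE, so the same substring check used in the build file still applies.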

Rollback of Persisted Data

Managing data is a bit harder, because you have to handle schema changes both in the persisted data and at the application level.

A specific post on the subject is coming soon… for now let’s assume that you can simply run a rollback script and revert schema and data on your database.

Test the Rollback automation

An important point to remember is that the rollback must be tested, as you do with any other feature. The process that we currently use is very simple: at every deploy in the “Integration Test” environment, we perform the following steps:

- deploy new version

- rollback

- deploy new version again
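These three steps can be sketched as a small shell check. This is an illustration only: `deploy`, `rollback` and `rollback_cycle_test` are hypothetical helpers that work on a local symbolic link, standing in for the real phing targets.

```bash
#!/bin/bash
# deploy LINK RELEASE_DIR: record the old link target, then repoint the link
deploy() {
    local link=$1 release=$2
    [ -L "$link" ] && readlink "$link" > "$release/previousVersion"
    ln -sfn "$release" "$link"
}

# rollback LINK: repoint the link to the release recorded in previousVersion
rollback() {
    local link=$1 previous
    previous=$(cat "$link/previousVersion") || return 1
    [ -d "$previous" ] || return 1
    ln -sfn "$previous" "$link"
}

# rollback_cycle_test LINK RELEASE_DIR: deploy, roll back, deploy again,
# and stop at the first step that leaves the link in the wrong place
rollback_cycle_test() {
    local link=$1 release=$2 before
    before=$(readlink "$link")
    deploy "$link" "$release" && [ "$(readlink "$link")" = "$release" ] \
        || { echo "FAILURE first deploy"; return 1; }
    rollback "$link" && [ "$(readlink "$link")" = "$before" ] \
        || { echo "FAILURE rollback"; return 1; }
    deploy "$link" "$release" && [ "$(readlink "$link")" = "$release" ] \
        || { echo "FAILURE second deploy"; return 1; }
    echo "OK rollback cycle"
}
```

Running the cycle on every deploy to a test environment means a broken rollback is noticed long before it is needed in production.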

Conclusions

This is a lesson learned from the first “not working” rollback we did in production… now we want to be sure that the rollback works every time, so we automated it and we dry-run the rollback procedure in the deployment pipeline.

What are your thoughts on the subject? How do you manage rollbacks? Let me know 🙂

After you deploy your application to the production environment, you should be sure that everything is up and running fine, and that your business still runs as expected. You look at your logs and everything is fine: no errors are present and the application flows regularly. Then you run a manual test and it works as expected.

The day after, you receive a phone call: your daily income is half that of the day before, and everyone in the business team is angry with you…

Antipattern: validate your deploy looking at your logs and running a manual test of your application.

The recipe: use business metrics to validate a deploy

At onebip.com we found that a better way to decide whether a deploy is successful is to measure some business KPIs for a few minutes after the deploy (2 minutes is enough): if the KPI values are still in the normal range, then the deploy is considered “good”; otherwise an automatic rollback to the previous version is run.

Here is an example from our phing build.xml file:

<target name="validate-deploy-by-kpi" depends="prepare">

<echo msg="Validation by kpi for deploy ${build.number} started..." />

<exec command="php ${project.basedir}/code/libraries/Onebip/Monitoring/validateDeploy.php" outputProperty="validate.deploy.kpi.output" />

<echo msg="validate.deploy.output: ${validate.deploy.kpi.output}" />

<condition property="validate.kpi.failed">

<contains substring="FAILED" string="${validate.deploy.kpi.output}" />

</condition>

<fail if="validate.kpi.failed" message="Validation by kpi on deploy ${build.number} failed. We should rollback" />

<echo msg="Validation by kpi for deploy ${build.number} completed" />

</target>

The KPIs used for deploy validation are the same ones that we use for 24x7 application monitoring: we instrumented the software using some probes (for the curious, some details are shared here on DZone) and we send application metrics to Datadog, a SaaS solution built to track metrics and events.

To validate the deploy, we just query the Datadog service (2 minutes after the deploy) using a custom php script able to manage the http authentication and retrieve the most important business metrics, then we check if the last values are inside a predetermined confidence band, and decide for the “go/no-go”.
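The confidence band check itself is simple. Here is a minimal sketch in shell, assuming the metric value has already been retrieved; the function name `validate_kpi` and the band limits are made up for illustration and this is not the validateDeploy.php script. It prints FAILED when the value is out of range, which is the string the phing target looks for.

```bash
#!/bin/bash
# validate_kpi VALUE LOWER UPPER
# Prints OK when LOWER <= VALUE <= UPPER, FAILED otherwise.
# awk does the comparison so that decimal values work too.
validate_kpi() {
    awk -v v="$1" -v lo="$2" -v hi="$3" 'BEGIN {
        if (v + 0 >= lo + 0 && v + 0 <= hi + 0) { print "OK"; exit 0 }
        print "FAILED"; exit 1
    }'
}
```

For example, `validate_kpi 105 90 120` prints OK, while `validate_kpi 40 90 120` prints FAILED and returns a non-zero status.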

Here is an example: the 2 red vertical bars mark the start and end of the “validation by KPI” step.

Conclusions

Instrument your software to track business metrics, and then use the same metrics to validate if a deploy can be considered successful or not.

You deploy the application to your production environment and… surprise: it doesn’t work!

Antipattern: start from your dev environment and scale up adding more servers, load balancers, db replicas etc…

While this seems a standard way to design and release a new application, it just postpones the issues that you will have when you scale up your infrastructure to production.

Let me clarify: at first glance this can be confused with “over design”, and we all know that is wrong. But you know that the application will run in a production environment, serving real users, so you have to design starting from your production environment and solve the issues that arise there first (fail fast and learn fast, as always).

The recipe: scale down from production

Here is a list of design considerations that emerged from experience at my company.

One of our applications, based on PHP+MongoDB, runs on a “standard” setup for a production environment: one domain name is resolved to 2 different IPs, allocated to 2 load balancers running HAProxy; the load balancers direct the traffic to 2 or more web servers running Apache+PHP, connected to a 3-node MongoDB replica set (no need for sharding yet). All the machines are virtual machines, built using RightScale templates.

Using this methodology, we can “scale down” the infrastructure simply by collapsing the 3 layers (LB, FrontEnd, BackEnd) into fewer virtual machines, still using the same full stack: for instance, our “Integration Tests” environment runs the full stack on a single machine, but HAProxy and a MongoDB replica set are included, even if not required. The apparent complexity of this setup is simplified by using “Server Templates” and virtual machines, which can be instantiated from scratch with a single click of a button.

The development environment is a virtual machine too, running on each of our laptops; we are working to have the dev virtual machine built from a RightScale template too, but at least we can share a “master” image with all the devs when something changes in the setup (an article on that is coming soon…)

This practice saved our life a few times:

- Starting from v.1.3, the MongoDB driver for PHP requires a different configuration for replica sets: we discovered that BEFORE going to the production environment (yes… we should read the change log…)

- We have cron jobs that must run as an “environment singleton” (just one job running in a given environment): again, we discovered this need BEFORE going to production and addressed it.

- Because we have multiple front-end web servers in production, we need to address “session management”: the final decision was to design the application to be “sessionless”. If we had followed the classic approach, we would have had to address “session management” in the production environment in some way: storing sessions in the DB, using server affinity in the load balancer, etc… in summary: a more complex infrastructure.

Conclusions

Designing your production environment first and then scaling it down is a practice that anticipates troubles and solves them at the “development” stage.

Modern technologies like server virtualization and automated infrastructure provisioning simplify this practice.

The first problem that you can have after a deployment is that… the configuration files for your software are somehow wrong: a wrong database password, a wrong connection url, a wrong log file name… more or less every configuration parameter in your application can be wrong.

Antipattern: prepare a configuration file for the production environment, deploy the application and look at the logs.

Well, you know what happens now: the application sometimes fails to start and you have to spend your time looking at your log files, while the application is down and your services are not available to users.

We found a simple solution for that: introduce a smoke test stage into the deployment pipeline.

The recipe to smoke test your deployment

First of all, what is a Smoke Test? According to Wikipedia,

a smoke test proves that “the pipes will not leak, the keys seal properly, the circuit will not burn, or the software will not crash outright”

The idea is simple: read the configuration parameters and validate them in the actual environment. For instance:

- search all the database connection parameters (hostname, database name, username, password, etc…) and try to make a real connection to that database.

- search all the remote URLs your application uses (APIs, webservices, etc..) and try to connect to them.

- search all the folder names and file names and check the existence and the permissions.

You can just create a script in your preferred language, execute it before going live with the new release (remember Practice #3: Deploy in the same way from dev to production environment) and abort the build in case of a test failure: your application stays at the current release and continues to be available to your users.

It’s very important to execute the smoke test script in the environment where your application runs, because you want to be sure that the resources (database, external API, etc.) are reachable from the same host where the application is installed. So, if you have multiple servers, run the smoke tests from all the servers in the array.
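As a minimal sketch of the “read the configuration and validate it” idea, assume a hypothetical ini file with plain `key = value` lines whose values are folder paths. The function names `ini_value` and `smoke_test_config` and the key names are invented for this example.

```bash
#!/bin/bash
# ini_value FILE KEY: print the value of the first "KEY = value" line
ini_value() {
    sed -n "s/^[[:space:]]*$2[[:space:]]*=[[:space:]]*//p" "$1" \
        | head -n 1 | sed 's/[[:space:]]*$//'
}

# smoke_test_config FILE KEY...: every KEY must name an existing,
# writable folder; report each failure and return non-zero if any
smoke_test_config() {
    local file=$1 key dir failed=0
    shift
    for key in "$@"; do
        dir=$(ini_value "$file" "$key")
        if [ -z "$dir" ] || [ ! -d "$dir" ] || [ ! -w "$dir" ]; then
            echo "FAILED $key: '$dir' is missing or not writable"
            failed=1
        fi
    done
    [ "$failed" -eq 0 ] && echo "Check configuration OK"
    return "$failed"
}
```

A call such as `smoke_test_config app.ini log_path cache_path` can then be run on each server, and a non-zero exit status aborts the build before the new release goes live.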

Here are some real how-tos, taken from our smoke test scripts.

How to smoke test a MongoDB connection

Here is an example script:

$ smoke-test-mongo-connection.sh srv1:27017,srv2:27017,srv3:27017

#!/bin/bash

# smoke-test-mongo-connection.sh

#

IFS=',' read -ra mongoConnections <<< "$1"

mongoConnection=${mongoConnections[0]}

mongoOutput=$(mongo $mongoConnection --eval 'status = db.serverStatus(); print(status["ok"]);' --quiet)

if [ "$mongoOutput" != "1" ]; then

echo "MongoDB not reachable at: $mongoConnection"

exit 1

fi

echo "Check configuration OK"
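Note that the script above probes only the first host of the list. If you want to probe every host, a loop like the following could be used. It is a sketch: `check_all_hosts` is a hypothetical helper, and the probe command is passed in by the caller, so nothing here depends on the mongo client.

```bash
#!/bin/bash
# check_all_hosts "host1:27017,host2:27017" PROBE_COMMAND [ARG...]
# Runs PROBE_COMMAND [ARG...] HOST for every host of the comma-separated
# list and reports each host for which the probe returns non-zero.
check_all_hosts() {
    local list=$1 host failed=0
    shift
    for host in $(printf '%s' "$list" | tr ',' ' '); do
        if ! "$@" "$host"; then
            echo "FAILED $host not reachable"
            failed=1
        fi
    done
    return "$failed"
}
```

The probe can be any command or shell function that takes the host as its last argument and returns zero when the host answers.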

How to smoke test folder permissions

Just run a script like this:

$ smoke-test-log-permissions.sh path/to/my/logs theuser

#!/bin/bash

# smoke-test-log-permissions.sh

#

applicationLogPath=$1

applicationLogPathPermissions=$2

applicationLogPathOutput=$(stat --format=%U $applicationLogPath)

if [ "$applicationLogPathOutput" != "$applicationLogPathPermissions" ]; then

echo "Permissions or owner of file $applicationLogPath are wrong: $applicationLogPathOutput"

exit 1

fi

echo "Check configuration OK"

How to smoke test a URL

You can just execute a script like this:

$ smoke-test-url.sh http://api.example.com

#!/bin/bash
# smoke-test-url.sh
#
# the URL to check is the first argument
url=$1
statusOutput=$(curl --insecure --silent -o /dev/null -w '%{http_code}' "$url")
# the right-hand sides 40* and 50* are left unquoted so they match as patterns
if [[ "$statusOutput" == "000" || "$statusOutput" == 40* || "$statusOutput" == 50* ]]; then
echo "$url not reachable"
exit 1
fi
echo "Check configuration OK"

Conclusions

As you can understand, I can show here just a few lines of our deployment smoke test script, but I think you get the idea:

- Parse the configuration files

- Try to instantiate database connections, connect to URLs and write to files

- Fail the build if one resource is not available

I would like to see your comments and thoughts here.

This is what we are learning in the company that I work for, where we are moving from an “svn update /var/www” anti-pattern to a deployment pipeline across 4 different environments, from Dev to Production.

Here you can find real solutions and how-tos that worked in our environment.

Practices that we follow for Continuous Deployment

As soon as we feel that we are suffering in some way, we try to find a Practice to mitigate the pain. Here is what we have done so far:

Practice #1: Same software stack from dev to production environment

Mitigate: “it works on my PC!”

Practice #2: Dedicated Continuous Integration environment for unit tests

Mitigate: “it works on my PC!”

Practice #3: Deploy in the same way from dev to production environment

Mitigate: deployment to production fails

Practice #4: Smoke test the deployments

Mitigate: major configuration errors

Practice #5: Use Business Metrics (KPI) to validate deploy

Mitigate: deployment to production succeeded, but business was impacted

Practice #6: Automate Rollback

Mitigate: production downtime after a failed deploy

Practice #7: Keep deployment pipeline fast

Mitigate: too much time required to go to production

Practice #8: Scale down, not up

Mitigate: “it works on my PC!”

(to be updated soon…)

We found that this is the best way to mitigate the usual impedance mismatch between “devs” and “operations”: deployment to production WILL fail, even if you write the best documentation possible, with a full description of all the steps required, and even if the “operations” guys write some scripts to execute the procedures.

The real solution that worked in our environment was to create a single deploy script that is used to deploy the application in all the environments in the deployment pipeline, from dev to production.

The recipe to deploy in the same way from dev to production environment

As our application stack is based on PHP running on Linux, it was natural for us to implement the deploy script using phing, scp and ssh.

The workflow is:

- build an “artifact” (environment independent)

- copy the artifact to the destination environment in a new folder, let’s say “application.233”

- copy the configuration files required for the destination environment

- setup all the required permissions on destination environment

- run the “migration” scripts required to update the database, if required

- move a symbolic link “application” from “application.232” to “application.233”

- Apache will now see the new files and serve the new application
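The core of this workflow (unpack into a numbered folder, copy the configuration, move the link) can be sketched for a single local machine as follows. This is an illustration under assumptions, not our build.xml: `deploy_artifact` and its arguments are hypothetical, and the permission and migration steps are left out.

```bash
#!/bin/bash
# deploy_artifact ARTIFACT_TGZ CONFIG_DIR BASE_DIR BUILD_NUMBER
# Unpacks the artifact into BASE_DIR/application.BUILD_NUMBER, copies the
# environment .ini files, then points BASE_DIR/application to the new folder.
deploy_artifact() {
    local artifact=$1 configs=$2 base=$3 number=$4
    local release="$base/application.$number"
    rm -rf "$release" && mkdir -p "$release/code/configs" || return 1
    tar -xzf "$artifact" -C "$release" || return 1
    cp "$configs"/*.ini "$release/code/configs/" || return 1
    # remember the release being replaced, if there is one
    [ -L "$base/application" ] && readlink "$base/application" > "$release/previousVersion"
    ln -sfn "$release" "$base/application"
}
```

Because the link is moved only after the copy steps succeed, a failure in any earlier step leaves the previous release in place.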

We deploy to all the environments using this same workflow, so even to deploy on the “localhost” machine we run “scp” to copy files and “ssh” to set up permissions, run migrations, etc. And because we have multiple servers in each environment, we use the “parallel-ssh” and “parallel-scp” utilities.

Let’s see some of the magic that we have in the phing build.xml file…

How to use parallel-scp to copy on multiple servers

Here is the snippet from build.xml

<target name="exec-pscp">

<exec

command="pscp ${scp.host.remote} -O LogLevel=ERROR -O UserKnownHostsFile=/dev/null -O StrictHostKeyChecking=no -O IdentityFile=${ssh.privkeyfile} -t 0 ${file} '${todir}'"

outputProperty="pscp.output"

/>

<echo msg="pscp.output: ${pscp.output}" />

<condition property="pscp.failed">

<contains substring="FAILURE" string="${pscp.output}" />

</condition>

<fail if="pscp.failed" message="Remote copy failed: ${pscp.output}" />

</target>

The property ${scp.host.remote} contains the list of the servers to deploy into, for instance “-H root@server1 -H root@server2”
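A host argument in this form can be built from a plain list of server names. The helper below is a small sketch; the name `hosts_argument` and the server names are made up.

```bash
#!/bin/bash
# hosts_argument USER HOST...: print "-H USER@HOST" for every HOST
hosts_argument() {
    local user=$1 host args=""
    shift
    for host in "$@"; do
        args="$args -H $user@$host"
    done
    # drop the leading space added by the first iteration
    echo "${args# }"
}
```

For instance, `hosts_argument root server1 server2` prints `-H root@server1 -H root@server2`.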

As you can see, we store the output of the pscp command in the property “pscp.output”, check the result of the operation and fail the build if required.

How to use parallel-ssh to remote execute commands on multiple servers

A similar trick is used to remote execute commands on all the servers in the environment. Here is the snippet:

<target name="exec-pssh">

<exec

command="pssh ${ssh.host.remote} -O LogLevel=ERROR -O UserKnownHostsFile=/dev/null -O StrictHostKeyChecking=no -O IdentityFile=${ssh.privkeyfile} -P -i -t 0 '${ssh.command}'"

outputProperty="pssh.output"

/>

<echo msg="pssh.output: ${pssh.output}" />

<condition property="pssh.failed">

<contains substring="FAILURE" string="${pssh.output}" />

</condition>

<fail if="pssh.failed" message="Remote command failed: ${pssh.output}" />

</target>

Again, the property ${ssh.host.remote} contains the list of the servers to deploy to, and we check the result of the operation by looking at the “outputProperty” of the command.
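The same “capture the output and look for FAILURE” check can be written in plain shell. The sketch below uses a hypothetical wrapper, `run_checked`, and also looks at the exit status of the command, which the phing snippets above do not do.

```bash
#!/bin/bash
# run_checked COMMAND [ARG...]
# Runs the command, echoes its output, and returns non-zero when the
# output contains FAILURE or the command itself failed.
run_checked() {
    local output status
    output=$("$@" 2>&1)
    status=$?
    echo "$output"
    case "$output" in
        *FAILURE*) return 1 ;;
    esac
    return "$status"
}
```

Checking both the text and the exit status catches the case where a command fails without printing the expected marker.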

The deploy workflow

Here is an extract from the build.xml, showing the main workflow for the deploy.

<target name="deploy">

<echo msg="Copying ${artifact.tgz.path} archive to environment ${deploy.environment} hosts:${fs.host}" />

<phingcall target="exec-pssh">

<property name="ssh.host.remote" value="${fs.hosts.argument}" />

<property name="ssh.command" value="rm -Rf ${fs.deploy.basedir.number}; mkdir -p ${fs.deploy.basedir.number}" />

</phingcall>

<phingcall target="exec-pscp">

<property name="scp.host.remote" value="${fs.hosts.argument}" />

<property name="todir" value="${fs.deploy.basedir.number}" />

<property name="file" value="${artifact.tgz.path}" />

</phingcall>

<echo msg="${artifact.tgz.name} copied to environment ${deploy.environment} host:${fs.host}" />

<!-- we unzip the application artifacts in a fresh new location, -->

<!-- set permissions and move a symlink to this location. -->

<!-- in this way we have a local backup of the previous version, useful for -->

<!-- a fast rollback (just move the link to the previous version) -->

<echo msg="Extracting archive to ${deploy.basedir.number} on host:${fs.host}" />

<phingcall target="exec-pssh">

<property name="ssh.host.remote" value="${fs.hosts.argument}" />

<property name="ssh.command"

value="tar -xzf ${fs.deploy.basedir.number}/${artifact.tgz.name} -C ${fs.deploy.basedir.number};

rm -f ${fs.deploy.basedir.number}/${artifact.tgz.name}" />

</phingcall>

<echo msg="copying configs from configs/${deploy.environment} to ${fs.deploy.basedir.number}/code/configs on host:${fs.host}" />

<phingcall target="exec-pssh">

<property name="ssh.host.remote" value="${fs.hosts.argument}" />

<property name="ssh.command" value="mkdir -p ${fs.deploy.basedir.number}/code/configs" />

</phingcall>

<phingcall target="exec-pscp">

<property name="scp.host.remote" value="${fs.hosts.argument}" />

<property name="todir" value="${fs.deploy.basedir.number}/code/configs" />

<property name="file" value="configs/${deploy.environment}/*.ini" />

</phingcall>

<echo msg="done" />

…. more steps here…. and then

<echo msg="================================================================================" />

<echo msg="Final step: Linking ${deploy.basedir.number} to ${deploy.basedir}" />

<echo msg="================================================================================" />

<phingcall target="exec-pssh">

<property name="ssh.host.remote" value="${ws.hosts.argument}" />

<property name="ssh.command"

value="rm -fR ${deploy.basedir};

ln -s ${deploy.basedir.number} ${deploy.basedir};

" />

</phingcall>

<echo msg="================================================================================" />

<echo msg="Checking link..." />

<phingcall target="check-link" />

<echo msg="Linking OK!" />

<echo msg="================================================================================" />

</target>

Conclusions

I can show here just a few snippets of our build.xml, but I think you can get the basics from what I have shown:

- Write a single deploy script that can be used to deploy in every environment in the same way

- Start solving problems related to your production environment early (e.g. multiple servers, minimal downtime, easy rollback, etc.)

- Always check the results of the operations you run and fail as required.

Feel free to share your comments and thoughts.

I recently went back to writing code with vim instead of the usual eclipse/netbeans. Leaving aside the debate over which is better (vim or an IDE), let’s look at which plugins I decided to install and which settings I use. To simplify plugin management, I prepared an installation script that installs and configures, in one go, the vim plugins I chose, which we will now look at in detail.

If you are in a hurry, you can jump straight to the complete script.

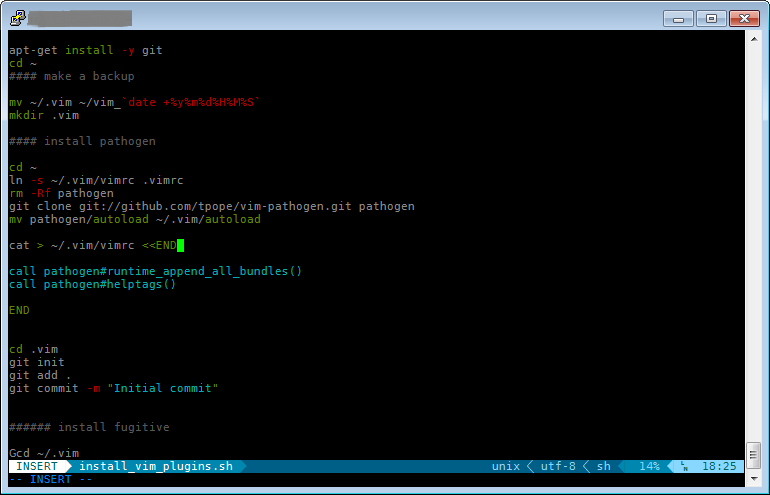

Pathogen

First of all, I installed pathogen, which simplifies plugin management. The script also makes sure that the Git client is installed and makes a copy of any existing vim configuration and plugins.

#!/bin/bash
apt-get install -y git
cd ~
#### make a backup
mv ~/.vim ~/vim_`date +%y%m%d%H%M%S`
mkdir .vim
#### install pathogen
cd ~
ln -s ~/.vim/vimrc .vimrc
rm -Rf pathogen
git clone git://github.com/tpope/vim-pathogen.git pathogen
mv pathogen/autoload ~/.vim/autoload
cat > ~/.vim/vimrc <<END
call pathogen#runtime_append_all_bundles()
call pathogen#helptags()
END
cd .vim
git init
git add .
git commit -m "Initial commit"
###### install fugitive
cd ~/.vim
git submodule add git://github.com/tpope/vim-fugitive.git bundle/fugitive
git submodule init && git submodule update

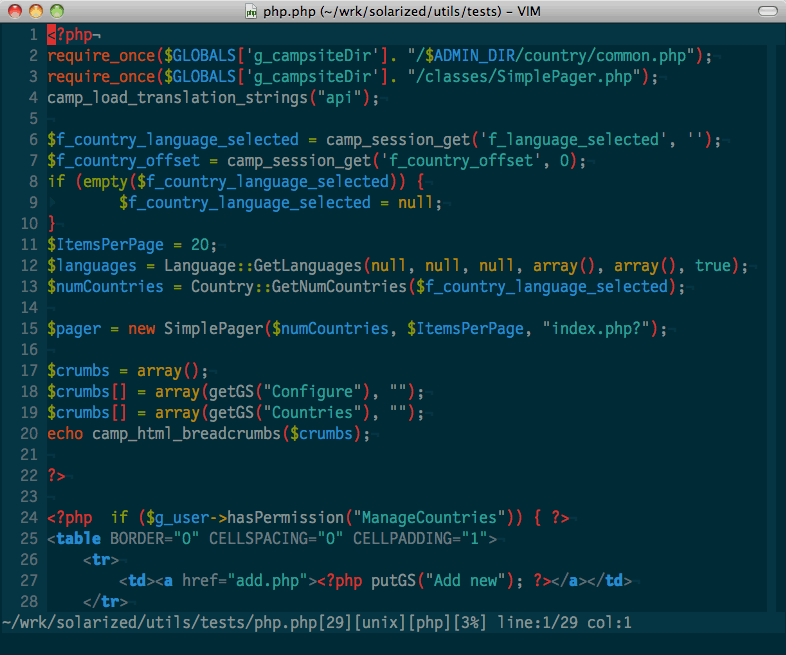

Solarized

Solarized is a plugin that changes the color palette used by vim.

Here is how to install it:

#### install Solarized
cd ~/.vim
git submodule add git://github.com/altercation/vim-colors-solarized.git bundle/solarized
git submodule init && git submodule update
cat >> ~/.vim/vimrc <<END
set background=dark
let g:solarized_termtrans=1
let g:solarized_termcolors=256
let g:solarized_contrast="high"
let g:solarized_visibility="high"
colorscheme solarized
END
echo export TERM="xterm-256color" >> ~/.bashrc

SuperTab

SuperTab is a vim plugin that maps autocompletion to the [TAB] key when you are in insert mode. Installation is quite simple:

### install SuperTab
cd ~/.vim
git submodule add https://github.com/ervandew/supertab.git bundle/supertab
git submodule init && git submodule update

CTRLP

CtrlP is a vim plugin that lets you run “fuzzy” searches across files and vim buffers, in a very simple and powerful way.

Here is how to install it:

###### install CTRLP
cd ~/.vim
git submodule add https://github.com/kien/ctrlp.vim.git bundle/ctrlp
git submodule init && git submodule update
cat >> ~/.vim/vimrc <<END
set runtimepath^=~/.vim/bundle/ctrlp
END
vim -c ':helptags ~/.vim/bundle/ctrlp/doc|q!'

Syntastic

Syntastic is a vim plugin that performs syntax checking of files. It supports practically all the most widely used languages, such as applescript, c, coffee, cpp, css, cucumber, cuda, docbk, erlang, eruby, fortran, gentoo_metadata, go, haml, haskell, html, javascript, json, less, lua, matlab, perl, php, puppet, python, rst, ruby, sass/scss, sh, tcl, tex, vala, xhtml, xml, xslt, yaml, zpt.

When using it with PHP I found it “annoying” that it also ran the “style” checks through phpcs, because I often work on legacy code that does not comply with “code style” standards. To disable phpcs, add the following to .vimrc:

let g:syntastic_phpcs_disable=1

Here is how to install it:

###### install Syntastic to check syntax inline

cd ~/.vim

git submodule add https://github.com/scrooloose/syntastic.git bundle/syntastic

git submodule init && git submodule update

cat >> ~/.vim/vimrc <<END

let g:syntastic_mode_map={'mode':'active', 'active_filetypes':[], 'passive_filetypes':['html']}

let g:syntastic_phpcs_disable=1

END

vim -c ':Helptags|q!'

Powerline

Powerline is a vim plugin that replaces vim’s status line (the last line at the bottom, to be clear) with one that is more graphically appealing and richer in information, without being distracting. Here is how it looks:

Since I normally use PuTTY as my terminal on Windows, I had to install a patched font. Here is how:

- download the DejaVuSansMono-Powerline.ttf font from https://gist.github.com/1630581#file_deja_vu_sans_mono_powerline.ttf

- install the font on Windows (just double-click the font file)

- configure PuTTY to use the “DejaVu Sans Mono for Powerline” font under Change settings-->Window-->Appearance-->font settings.

Here is the part of the script that installs Powerline:

###### install Powerline
#
# Remember to download patched font DejaVuSansMono-Powerline.ttf for windows
# from https://gist.github.com/1630581#file_deja_vu_sans_mono_powerline.ttf
# and install it in windows, then select "DejaVu Sans Mono for Powerline" in PuTTY
# (Window-->Appearance-->font)
cd ~/.vim
git submodule add https://github.com/Lokaltog/vim-powerline.git bundle/powerline
git submodule init && git submodule update
cat >> ~/.vim/vimrc <<END
let g:Powerline_symbols='fancy'
END

Other Customizations

I then made a series of customizations to .vimrc:

###### configure .vimrc with custom settings
cat >> ~/.vim/vimrc <<END
set expandtab tabstop=4 shiftwidth=4 softtabstop=4
set incsearch
set hlsearch
set ignorecase
set smartcase
set encoding=utf-8
set showcmd
set comments=sr:/*,mb:*,ex:*/
set wildmenu
set wildmode=longest,full
set wildignore=.svn,.git
set nocompatible
set laststatus=2
END

Complete script

Here is the complete script, which performs all the operations I described.

#!/bin/bash

apt-get install -y git

cd ~

#### make a backup

mv ~/.vim ~/vim_`date +%y%m%d%H%M%S`

mkdir .vim

#### install pathogen

cd ~

ln -s ~/.vim/vimrc .vimrc

rm -Rf pathogen

git clone git://github.com/tpope/vim-pathogen.git pathogen

mv pathogen/autoload ~/.vim/autoload

cat > ~/.vim/vimrc <<END

call pathogen#runtime_append_all_bundles()

call pathogen#helptags()

END

cd .vim

git init

git add .

git commit -m "Initial commit"

###### install fugitive

cd ~/.vim

git submodule add git://github.com/tpope/vim-fugitive.git bundle/fugitive

git submodule init && git submodule update

#### install Solarized

cd ~/.vim

git submodule add git://github.com/altercation/vim-colors-solarized.git bundle/solarized

git submodule init && git submodule update

cat >> ~/.vim/vimrc <<END

set background=dark

let g:solarized_termtrans=1

let g:solarized_termcolors=256

let g:solarized_contrast="high"

let g:solarized_visibility="high"

colorscheme solarized

END

echo export TERM="xterm-256color" >> ~/.bashrc

### install SuperTab

cd ~/.vim

git submodule add https://github.com/ervandew/supertab.git bundle/supertab

git submodule init && git submodule update

###### install CTRLP

cd ~/.vim

git submodule add https://github.com/kien/ctrlp.vim.git bundle/ctrlp

git submodule init && git submodule update

cat >> ~/.vim/vimrc <<END

set runtimepath^=~/.vim/bundle/ctrlp

END

vim -c ':helptags ~/.vim/bundle/ctrlp/doc|q!'

###### install Syntastic to check syntax inline

cd ~/.vim

git submodule add https://github.com/scrooloose/syntastic.git bundle/syntastic

git submodule init && git submodule update

cat >> ~/.vim/vimrc <<END

let g:syntastic_mode_map={'mode':'active', 'active_filetypes':[], 'passive_filetypes':['html']}

let g:syntastic_phpcs_disable=1

END

vim -c ':Helptags|q!'

###### install Powerline

#

# Remember to download patched font DejaVuSansMono-Powerline.ttf for windows

# from https://gist.github.com/1630581#file_deja_vu_sans_mono_powerline.ttf

# and install it in windows, then select "DejaVu Sans Mono for Powerline" in PuTTY

# (Window-->Appearance-->font)

cd ~/.vim

git submodule add https://github.com/Lokaltog/vim-powerline.git bundle/powerline

git submodule init && git submodule update

cat >> ~/.vim/vimrc <<END

let g:Powerline_symbols='fancy'

END

###### configure .vimrc with custom settings

cat >> ~/.vim/vimrc <<END

set expandtab tabstop=4 shiftwidth=4 softtabstop=4

set incsearch

set hlsearch

set ignorecase

set smartcase

set encoding=utf-8

set showcmd

set comments=sr:/*,mb:*,ex:*/

set wildmenu

set wildmode=longest,full

set wildignore=.svn,.git

set nocompatible

set laststatus=2

END